This blogpost is an excerpt of Springboard’s free guide to data science jobs and originally appeared on the Springboard blog.

Data Science Skills

Most data scientists use a combination of skills every day, some of which they have taught themselves on the job or otherwise. They also come from various backgrounds. There isn’t any one specific academic credential that data scientists are required to have.

All the skills discussed in this section can be self-learned. We’ve laid out some resources to get you started down that path. Consider it a guide on how to become a data scientist.

Mathematics

Mathematics is an important part of data science. Make sure you know the basics of university math from calculus to linear algebra. The more math you know, the better.

When data gets large, it often gets unwieldy. You’ll have to use mathematics to process and structure the data you’re dealing with.

You won’t be able to avoid calculus and linear algebra, even if you missed those topics in undergrad. You’ll need to understand how to manipulate matrices of data and grasp the general idea behind the math of algorithms.

Resources: This list of 15 Mathematics MOOC courses can help you catch up with math skills. MIT also offers an open course specifically on the mathematics of data science.

Statistics

You must know statistics to generalize insights from small samples to larger populations. This is the fundamental law of data science. Statistics will pave your path on how to become a data scientist.

You need to know statistics to play with data. Statistics allows you to better understand patterns observed in data, and extract the insights you need to make reasonable conclusions. For instance, understanding inferential statistics will help you make general conclusions about everybody in a population from a smaller sample.

To understand data science, you must know the basics of hypothesis testing and experimental design so you can understand the meaning and context of your data.
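To make the idea of inferring from a sample concrete, here is a minimal sketch in Python that estimates a population mean from a small sample and attaches an approximate 95% confidence interval. The height values are made up for illustration, and the 1.96 multiplier assumes a normal approximation, which is only a rough guide for a sample this small.

```python
import math
import statistics


def mean_confidence_interval(sample, z=1.96):
    """Return (mean, lower, upper) for an approximate 95% confidence interval.

    Assumption: the normal approximation is acceptable for this sample.
    """
    n = len(sample)
    mean = statistics.mean(sample)
    # Standard error: sample standard deviation divided by sqrt(n).
    std_err = statistics.stdev(sample) / math.sqrt(n)
    margin = z * std_err
    return mean, mean - margin, mean + margin


# Hypothetical sample of heights (cm) drawn from a much larger population.
heights = [170, 165, 180, 175, 160, 172, 168, 177, 169, 174]
mean, low, high = mean_confidence_interval(heights)
print(f"sample mean = {mean:.1f} cm, approx. 95% CI = ({low:.1f}, {high:.1f})")
```

The interval is the hedge: instead of claiming the population mean equals the sample mean, you report a range that is likely to contain it.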

Resources: Our blog published a primer on how Bayes Theorem, probability and stats intersect with one another. The post forms a good basis for understanding the statistical foundation of how to become a data scientist.

Algorithms

Algorithms are sets of rules or steps that computers follow to solve a problem. Understanding how to use machines to do your work is essential to processing and analyzing data sets too large for the human mind to process.

In order for you to do any heavy lifting in data science, you’ll have to understand the theory behind algorithm selection and optimization. You’ll have to decide whether or not your problem demands a regression analysis, or an algorithm that helps classify different data points into defined categories.
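The difference between the two kinds of problem can be sketched in a few lines of Python. This is a toy illustration, not a recommended modeling workflow: the regression part fits a straight line by ordinary least squares to predict a number, while the classification part uses a simple threshold rule to assign a category. The spend and sales figures, the function names, and the threshold are all hypothetical.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def classify(value, threshold):
    """Toy classifier: assign a category by comparing to a threshold."""
    return "high" if value >= threshold else "low"


# Hypothetical data: advertising spend vs. sales.
spend = [1, 2, 3, 4, 5]
sales = [3, 5, 7, 9, 11]

# Regression: predict a continuous number for a new input.
slope, intercept = fit_line(spend, sales)
predicted = slope * 6 + intercept

# Classification: place that prediction into a defined category.
label = classify(predicted, threshold=10)
print(predicted, label)
```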

You’ll want to know many different algorithms. You’ll also want to learn the fundamentals of machine learning. Machine learning is what allows Amazon to recommend products to you based on your purchase history without any direct human intervention. It is a set of algorithms that will use machine power to unearth insights for you.

To deal with massive datasets you’ll need to use machines to extend your thinking.

Resources: This guide by KDNuggets helps explain ten common data science algorithms in plain English. Here are 19 free public datasets so you can practice implementing different algorithms on data.

Data Visualization

Finishing your data analysis is only half the battle. To drive impact, you will have to convince others to believe and adopt your insights.

Human beings are visual creatures. According to 3M and Zabisco, almost 90% of the information transmitted to your brain is visual in nature, and visuals are processed 60,000 times faster than text.

Data visualization is the art of presenting information through charts and other visual tools, so that the audience can easily interpret the data and draw insights from it. What information is best presented in a bar chart and what types of data should we present in a scatter plot?

Human beings are wired to respond to visual cues. The better you can present your data insights, the more likely it is that someone will take action based on them.

Resources: We’ve got a list of 31 free data visualization tools you can play around with. Nathan Yau’s FlowingData blog is filled with data visualization tips and tricks that will bring you to the next level.

Business Knowledge

Data means little without its context. You have to understand the business you’re analyzing. Clarity is the centerpiece of how to become a data scientist.

Most companies depend on their data scientists not just to mine data sets, but also to communicate their results to various stakeholders and present recommendations that can be acted upon.

The best data scientists not only have the ability to work with large, complex data sets, but also understand intricacies of the business or organization they work for.

Having general business knowledge allows them to ask the right questions, and come up with insightful solutions and recommendations that are actually feasible given any constraints that the business might impose.

Resources: This list of free business courses can help you gain the knowledge you need. Our Data Analytics for Business course can help you skill up on this dimension with a mentor.

Domain Expertise

As a data scientist, you should know the business you work for and the industry it lives in.

Beyond having deep knowledge of the company you work for, you’ll also have to understand the field it works in for your business insights to make sense. Data from a biology study can have a drastically different context than data gleaned from a well-designed psychology study. You should know enough to cut through industry jargon.

Resources: This will be largely industry-dependent. You’ll have to find your own way and learn as much about your industry as possible!

Analytical Mind

You’ll need an analytical mindset to do well in data science. A lot of data science involves solving problems with a sharp and keen mind.

Resources: Keep your mind sharp with books and puzzles. A site like Lumosity can help make sure you’re cognitively sharp at all times.

Data Science Tools

With your skill set developed, you’ll now need to learn how to use modern data science tools. Each tool has its strengths and weaknesses, and each plays a different role in the data science process. You can use one of them, or you can use all of them. What follows is a broad overview of the most popular tools in data science as well as the resources you’ll need to learn them properly if you want to dive deeper.

File Formats

Data can be stored in different file formats. Here are some of the most common:

CSV: Comma separated values. You may have opened this sort of file with Excel before. CSVs separate out data with a delimiter, a piece of punctuation that serves to separate out different data points.

SQL: SQL, or structured query language, is used to work with data stored in relational tables. If you read across a row from left to right, you’ll get different data points on the same entity (for example, a person will have a value in the AGE, GENDER, and HEIGHT columns).

JSON: JavaScript Object Notation is a lightweight data exchange format that is both human and machine-readable. Data from a web server is often transmitted in this format.
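As a rough illustration of how two of these formats differ in practice, the sketch below reads the same made-up records from a CSV string and a JSON string using only Python’s standard library. The names and values are hypothetical.

```python
import csv
import io
import json

# Hypothetical records, one person per row or object.
csv_text = "name,age,height\nAda,36,170\nLin,29,165\n"
json_text = (
    '[{"name": "Ada", "age": 36, "height": 170},'
    ' {"name": "Lin", "age": 29, "height": 165}]'
)

# csv.DictReader uses the header row as keys; every value arrives as a string.
csv_rows = list(csv.DictReader(io.StringIO(csv_text)))

# json.loads preserves the types written in the file, so numbers stay numbers.
json_rows = json.loads(json_text)

print(type(csv_rows[0]["age"]).__name__, type(json_rows[0]["age"]).__name__)
```

One practical consequence: values read from a CSV usually need to be converted to numbers before analysis, while JSON carries basic types with it.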

Excel

Introduction to Excel: Excel is often the gateway to data science, and something that every data scientist can benefit from learning.

Excel allows you to easily manipulate data with what is essentially a What You See Is What You Get editor that lets you perform calculations on data without working in code at all. It is a handy tool for data analysts who want to get results without programming.

Excel is easy to get started with, and it’s a program that anybody who is in analytics will intuitively grasp. It can be useful to communicate data to people who may not have any programming skills: they should still be able to play with the data.

Who Uses This: Data analysts tend to use Excel.

Level of Difficulty: Beginner

Sample Project: Importing a small dataset on the statistics of NBA players and making a simple graph of the top scorers in the league

SQL

Introduction to SQL: SQL is the most popular language for retrieving data.

Data science needs data. SQL is a programming language specially designed to extract data from databases.

SQL is the most popular tool used by data scientists. Most data in the world is stored in tables that will require SQL to access. You’ll be able to filter and sort through the data with it.

Who Uses This: Data analysts and some data engineers tend to use SQL.

Level of Difficulty: Beginner

Sample Project: Using a query to select the top ten most popular songs from a SQL database of the Billboard 100.
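A project like this can be tried without installing a database server. The sketch below uses Python’s built-in sqlite3 module with an in-memory database; the songs table, its columns, and its rows are invented for illustration and are not real chart data.

```python
import sqlite3

# In-memory database, so nothing is written to disk.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE songs (title TEXT, artist TEXT, plays INTEGER)")
conn.executemany(
    "INSERT INTO songs VALUES (?, ?, ?)",
    [
        ("Song A", "Artist 1", 500),
        ("Song B", "Artist 2", 900),
        ("Song C", "Artist 3", 700),
        ("Song D", "Artist 1", 300),
    ],
)

# Sort by popularity and keep the top rows; use LIMIT 10 for a top ten.
top_songs = conn.execute(
    "SELECT title, plays FROM songs ORDER BY plays DESC LIMIT 3"
).fetchall()
conn.close()

for title, plays in top_songs:
    print(title, plays)
```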

Python

Introduction to Python: Python is a powerful, versatile programming language for data science.

Once you download Rodeo, Yhat’s Python IDE, you’ll quickly realize how intuitive Python is. A versatile programming language built for everything from building websites to gathering data from across the web, Python has many code libraries dedicated to making data science work easier.

Python is a versatile programming language with a simple syntax that is easy to learn.

The average salary for jobs with Python in their description is around $102,000. Python is the most popular programming language taught in universities, so the community of Python programmers is only going to grow in the years to come. The Python community is passionate about teaching Python, and building useful tools that will save you time and allow you to do more with your data.

Many data scientists use Python to solve their problems: 40% of respondents to a definitive data science survey conducted by O’Reilly used Python, which was more than the 36% who used Excel.

Who Uses This: Data engineers and data scientists will use Python for medium-size data sets.

Level of Difficulty: Intermediate

Sample Project: Using Python to source tweets from celebrities, then doing an analysis of the most frequent words used by applying programming rules.
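The analysis half of that project can be sketched with the standard library alone. The example below skips the step of sourcing tweets and works on a few made-up strings instead; the function name and the short stop-word list are assumptions chosen for illustration.

```python
import re
from collections import Counter

# A tiny, hypothetical stop-word list; a real project would use a fuller one.
STOP_WORDS = frozenset({"the", "a", "to", "and", "is", "of", "for", "on"})


def most_common_words(texts, top_n=3):
    """Count words across all texts, ignoring case and stop words."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(word for word in words if word not in STOP_WORDS)
    return counts.most_common(top_n)


# Made-up stand-ins for tweets.
tweets = [
    "Excited to share the new album today",
    "The new tour starts today",
    "Thank you for the love on the new album",
]
top_words = most_common_words(tweets)
print(top_words)
```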

R

Introduction to R: R is a staple in the data science community because it is designed explicitly for data science needs. It is the most popular programming environment in data science with 43% of data professionals using it.

R is a programming environment designed for data analysis. R shines when it comes to building statistical models and displaying the results.

R is an environment where a wide variety of statistical and graphing techniques can be applied.

The community contributes packages that, similar to Python, can extend the core functions of the R codebase so that it can be applied to specific problems such as measuring financial metrics or analyzing climate data.

Who Uses This: Data engineers and data scientists will use R for medium-size data sets.

Level of Difficulty: Intermediate

Sample Project: Using R to graph stock market movements over the last five years.

Big Data Tools

Big data owes much to Moore’s Law, the observation that the number of transistors on a chip, and with it computing power, roughly doubles every two years. This has led to the rise of massive data sets generated by millions of computers. Imagine how much data Facebook has at any given time!

Any data set that is too large for conventional data tools such as SQL and Excel can be considered big data, according to McKinsey. The simplest definition is that big data is data that can’t fit onto your computer.

Here are tools to solve that problem:

Hadoop

Introduction to Hadoop: By using Hadoop, you can store your data in multiple servers while controlling it from one.

The solution is a technology called MapReduce. MapReduce is an elegant abstraction that treats a series of computers as if it were one central server. This allows you to store data on multiple computers, but process it through one.

Hadoop is an open-source ecosystem of tools that allow you to MapReduce your data and store enormous datasets on different servers. It allows you to manage much more data than you can on a single computer.
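The MapReduce idea can be illustrated with a toy word count in plain Python. This is a single-process sketch, not Hadoop’s API: each string in the list stands in for a chunk of data that would live on a separate machine, and the function names are hypothetical.

```python
from collections import defaultdict


def map_phase(chunk):
    """Map: emit a (word, 1) pair for every word in one chunk of text."""
    return [(word, 1) for word in chunk.lower().split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: combine the values for each key into a single result."""
    return {key: sum(values) for key, values in grouped.items()}


# Each string stands in for data stored on a different server.
chunks = ["big data big ideas", "data tools", "big tools"]
mapped = [pair for chunk in chunks for pair in map_phase(chunk)]
word_counts = reduce_phase(shuffle(mapped))
print(word_counts)
```

Because the map step looks at each chunk independently, it can run on many machines at once; only the grouped results need to be brought together.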

Who Uses This: Data engineers and data scientists will use Hadoop to handle big data sets.

Level of Difficulty: Advanced

Sample Project: Using Hadoop to store massive datasets that update in real time, such as the number of likes Facebook users generate.

NoSQL

Introduction to NoSQL: NoSQL allows you to manage data without unneeded weight.

Tables that bring all their data with them can become cumbersome. NoSQL includes a host of data storage solutions that separate out huge data sets into manageable chunks.

NoSQL was a trend pioneered by Google to deal with the impossibly large amounts of data they were storing. Often structured in the JSON format popular with web developers, solutions like MongoDB have created databases that can be manipulated like SQL tables, but which can store the data with less structure and density.

Who Uses This: Data engineers and data scientists will use NoSQL for big data sets, often website databases for millions of users.

Level of Difficulty: Advanced

Sample Project: Storing data on users of a social media application that is deployed on the web.

Bringing It All Together: Tools in the Data Science Process

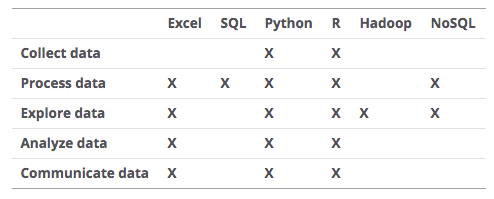

Each one of the tools we’ve described is complementary. They each have their strengths and weaknesses, and each one can be applied to different stages in the data science process.

Collect Data

Sometimes it isn’t doing the data analysis that is hard, but finding the data you need. Thankfully, there are many resources.

You can create datasets by taking data from what is called an API or an application programming interface that allows you to take structured data from certain providers. You’ll be able to query all kinds of data from Twitter, Facebook, and Instagram among others.

If you want to play around with public datasets, the United States government has made some free to all. The most popular datasets are tracked on Reddit. Dataset search engines such as Quandl allow you to search for the perfect dataset.

Springboard has compiled 19 of our favorite public datasets on our blog to help you out in case you ever need good data right away.

Looking for something a little less serious? Check out Yhat’s 7 Datasets You’ve Likely Never Seen Before, including one on pigeon racing!

Python supports most data formats. You can play with CSVs or you can play with JSON sourced from the web. You can import SQL tables directly into your code.

You can also create datasets from the web. The Python requests library can download a web page with a single line of code. You’ll be able to take data from Wikipedia tables, and once you’ve parsed and cleaned the HTML with the beautifulsoup library, you’ll be able to analyze it in depth.
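To show what turning a web table into clean data looks like without needing a network connection or extra packages, the sketch below uses the standard library’s html.parser as a stand-in for beautifulsoup and an inline HTML string in place of a downloaded page. The table contents and the TableParser class are hypothetical.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect the text of every table cell, grouped by row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._current_row = None
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._current_row = []
        elif tag in ("td", "th") and self._current_row is not None:
            self._in_cell = True
            self._current_row.append("")

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._in_cell = False
        elif tag == "tr" and self._current_row is not None:
            self.rows.append(self._current_row)
            self._current_row = None

    def handle_data(self, data):
        if self._in_cell:
            self._current_row[-1] += data.strip()


# Stand-in for a page you would normally download first.
page_html = """
<table>
  <tr><th>City</th><th>Population</th></tr>
  <tr><td>City A</td><td>1,200</td></tr>
  <tr><td>City B</td><td>3,400</td></tr>
</table>
"""

parser = TableParser()
parser.feed(page_html)
header, *records = parser.rows

# Cleaning step: strip thousands separators and convert to integers.
cleaned = [(name, int(count.replace(",", ""))) for name, count in records]
print(header, cleaned)
```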

R can take data from Excel, CSV, and from text files. Files built in Minitab or in SPSS format can be turned into R dataframes.

The rvest package will allow you to perform basic web scraping, while the pipe operator from magrittr lets you chain the cleaning and parsing steps together. These packages play a role similar to the requests and beautifulsoup libraries in Python.

Process Data

Excel allows you to easily clean data with menu functions that can clean duplicate values, filter and sort columns, and delete rows or columns of data.

SQL has basic filtering and sorting functions so you can source exactly the data you need. You can also update SQL tables and clean certain values from them.

Python uses the Pandas library for data analysis. It is much quicker to process larger data sets than Excel, and has more functionality.

You can clean data by applying programmatic methods to the data with Pandas. You can, for example, replace every error value in the dataset with a default value such as zero in one line of code.
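Here is a minimal sketch of that kind of one-line cleanup, assuming pandas is installed. The column names and values are made up; "N/A" and None stand in for the error values you might find in a real dataset.

```python
import pandas as pd

# Hypothetical dataset with an error marker and a missing value.
df = pd.DataFrame({"player": ["A", "B", "C"], "points": ["25", "N/A", None]})

# One line: anything that is not a number becomes NaN, then NaN becomes zero.
df["points"] = pd.to_numeric(df["points"], errors="coerce").fillna(0)

print(df)
```

Whether zero is a sensible default depends on the analysis; for averages, for example, dropping the rows may be the better choice.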

R can help you add columns of information, reshape, and transform the data itself. Many of the newer R libraries such as reshape2 allow you to play with different data frames and make them fit the criterion you’ve set.

NoSQL lets you subset large data sets and modify records as needed, which you can use to clean your data.

Explore Data

Excel can add columns together, get the averages, and do basic statistical and numerical analysis with pre-built functions.

Python and Pandas can take complex rules and apply them to data so you can easily spot high-level trends.

You’ll be able to do deep time series analysis in Pandas. You could track variations in stock prices to their finest detail.

R was built to do statistical and numerical analysis of large data sets. You’ll be able to build probability distributions, apply a variety of statistical tests to your data, and use standard machine learning and data mining techniques.

NoSQL and Hadoop both allow you to explore data on a similar level to SQL.

Analyze Data

Excel can analyze data at an advanced level. Use pivot tables that display your data dynamically, advanced formulas, or macro scripts that allow you to programmatically go through your data.

Python has a numeric analysis library: NumPy. You can do scientific computing and calculation with SciPy. You can access a lot of pre-built machine learning algorithms with the scikit-learn code library.

R has plenty of packages out there for specific analyses such as the Poisson distribution and mixtures of probability laws.

Communicate Data

Excel has basic chart and plotting functionality. You can easily build dashboards and dynamic charts that will update as soon as somebody changes the underlying data.

Python has a lot of powerful options to visualize data. You can use the Matplotlib library to generate basic graphs and charts from the data embedded in your Python. If you want something that’s a bit more advanced, you could try Plot.ly and its Python API.

You can also use the nbconvert tool to turn your Jupyter notebooks into HTML documents. This can help you embed snippets of code into interactive websites or your online portfolio. Many people have used this tool to create online tutorials on how to learn Python.

R was built to do statistical analysis and demonstrate the results. It’s a powerful environment suited to scientific visualization with many packages that specialize in graphical display of results. The base graphics module allows you to make all of the basic charts and plots you’d like from data matrices. You can then save these files into image formats such as JPG, or you can save them as separate PDFs. You can use ggplot2 for more advanced plots such as complex scatter plots with regression lines.

Starting Your Job Search

Now that you’ve gotten an idea of the skills and tools you need to know to get into data science and how to become a data scientist, it’s time to apply that theory to the practice of applying for data science jobs.

Build a Data Science Portfolio and Resume

You need to make a great first impression to break into data science. That starts with your portfolio and your resume. Many data scientists have their own website which serves as both a repository of their work and a blog of their thoughts.

This allows them to demonstrate their experience and the value they create in the data science community. In order for your portfolio to have the same effect, it must have the following traits:

- Your portfolio should highlight your best projects. Focusing on a few memorable projects is generally better than showing a large number of dilute projects.

- It must be well-designed, and tell a captivating story of who you are beyond your work.

- You should build value for your visitors by highlighting any impact you’ve had through your work. Maybe you built a tool that’s useful for everyone? Perhaps you have a tutorial? Showcase them here.

- It should be easy to find your contact information.

Take a look at our mentor Sundeep Pattem’s personal portfolio for example projects.

He’s worked on complex data problems that resonate in the real world. He has five projects dealing with healthcare costs, labor markets, energy sustainability, online education, and world economies, fields where there are plenty of data problems to solve.

These projects are independent of any workplace. They show that Sundeep innately enjoys creating solutions to complex problems with data science.

If you’re short on project ideas, you can participate in data science competitions. Platforms like Kaggle, Datakind and Datadriven allow you to work with real corporate or social problems. By using your data science skills, you can show your ability to make a difference, and create the strongest portfolio asset of all: a demonstrated bias to action.

Where to Find Jobs

- Kaggle offers a job board for data scientists.

- You can find a list of open data scientist jobs at Indeed, the search engine for jobs.

- Datajobs offers a listings site for data science. It’s a great place to see how to become a data scientist.

You can also find opportunities through networking and through finding a mentor. We continue to emphasize that the best job positions are often found by talking to people within the data science community. That’s how you become a data scientist.

You’ll also be able to find opportunities for employment in startup forums. Hacker News has a job board that is exclusive to Y Combinator startups (perhaps the most prestigious startup accelerator in the world). AngelList is a database for startups looking to get funding and it has a jobs section.

Ace the Data Science Interview

An entire book can be written on the data science interview–in fact, we did just that!

If you get an interview, what do you do next? There are several kinds of questions that are always asked in a data science interview: your background, coding questions, and applied machine learning questions. You should always anticipate a mixture of technical and non-technical questions in any data science interview. Make sure you brush up on your programming and data science–and try to interweave it with your own personal story!

You’ll also often be asked to analyze datasets. You’ll likely be asked culture fit and stats questions. To prepare for the coding questions, you’ll have to treat interviews on data science partly as a software engineering exercise. You should brush up on coding interview resources, many of which are available online. Here is a list of data science questions you might encounter. Among some of these questions, you’ll see common ones like:

- Python vs R: which language do you prefer for [x] situation?

- What is K-means (a specific type of data science algorithm)? Describe when you would use it.

- Tell me a bit about the last data science project you worked on.

- What do you know about the key growth drivers for our business?

The first type of question tests your programming knowledge. The second type of question tests what you know about data science algorithms, and makes you share your real-life experience with them. The third question is a deep dive into the work you’ve done with data science before. Finally, the fourth type of question will test how much you know about the business you’re interviewing with.

If you can demonstrate how your data science work can help move the needle for your potential employers, you’ll impress them. They’ll know they have somebody who cares enough to look into what they’re doing, and who knows enough about the industry that they don’t have to teach you much. And that’s how to become a data scientist.

About Springboard

Visit Springboard to find out more about Springboard’s data science career track with a guaranteed job offer and hand-picked mentors!