Docker is a containerization tool used for spinning up isolated, reproducible application environments. This piece details how to containerize a Django project, Postgres, and Redis for local development, and how to deliver the stack to the cloud via Docker Compose and Docker Machine.

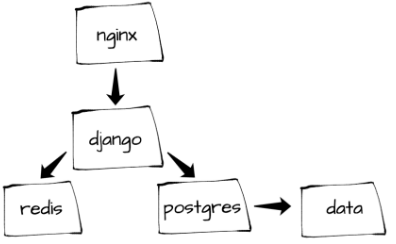

In the end, the stack will include a separate container for each service:

- 1 web/Django container

- 1 nginx container

- 1 Postgres container

- 1 Redis container

- 1 data container

Updates:

- 12/27/2015: Updated to the latest versions of Docker (Docker client v1.9.1, Docker Compose v1.5.2, and Docker Machine v0.5.4) and Python (v3.5)

Interested in creating a similar environment for Flask? Check out this blog post.

Local Setup

Along with Docker (v1.9.1), we will be using:

- Docker Compose (v1.5.2) for orchestrating multiple containers as a single app, and

- Docker Machine (v0.5.4) for creating Docker hosts both locally and in the cloud.

Follow the directions here and here to install Docker Compose and Machine, respectively.

If you're running either Mac OS X or Windows, your best bet is to install Docker Toolbox.

Test out the installs:
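
A quick check, assuming all three binaries are on your PATH. Each command should print a version string, though the exact output depends on the release you installed:

```shell
# Print the installed version of each tool
docker --version
docker-compose --version
docker-machine --version
```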

Next, clone the project from the repository, or create your own project based on the project structure found in the repo.

We’re now ready to get the containers up and running…

Docker Machine

To start Docker Machine, simply navigate to the project root and then run:
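
A sketch of that step, assuming the VirtualBox driver (which Docker Toolbox installs) and a machine named dev, the name used throughout this post. Provisioning prints a series of status lines and can take a few minutes:

```shell
# Create a VirtualBox VM named "dev" with the Docker daemon running inside it
docker-machine create -d virtualbox dev
```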

The create command set up a new “Machine” (called dev) for Docker development. In essence, it started a VM with Docker running. Now just point Docker at the dev machine:
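
The Docker client finds the machine through environment variables (DOCKER_HOST, DOCKER_CERT_PATH, and friends) that docker-machine env prints. Evaluating that output loads them into the current shell only, so a new terminal needs the same command:

```shell
# Export the connection settings for the "dev" machine into this shell
eval "$(docker-machine env dev)"
```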

Run the following command to view the currently running Machines:
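
The machine your shell currently points at is flagged in the ACTIVE column:

```shell
# List all machines with their driver, state, and URL
docker-machine ls
```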

Next, let’s fire up the containers with Docker Compose and get Django, Postgres, and Redis up and running.

Docker Compose

Let’s take a look at the docker-compose.yml file:
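
The repo's own file isn't reproduced here. If you're building your own project instead of cloning, the following writes a minimal docker-compose.yml along the lines of the five services described below. It is a sketch in the version 1 Compose file format that Docker Compose v1.5.2 reads. The build directories (./web, ./nginx), the container path /usr/src/app, and the port mappings are assumptions, and the static-file sharing between web and nginx is left out, so adjust it to your layout:

```shell
# Write a minimal, hypothetical docker-compose.yml (Compose v1 file format).
# The quoted 'EOF' stops the shell from expanding anything inside the YAML.
cat > docker-compose.yml <<'EOF'
web:
  build: ./web            # Dockerfile in the "web" directory (assumed path)
  expose:
    - "8000"              # Django listens here for linked containers
  links:
    - postgres:postgres
    - redis:redis
  volumes:
    - ./web:/usr/src/app  # mount local code for development (container path assumed)
  env_file: .env

nginx:
  build: ./nginx          # assumed directory holding the nginx config
  ports:
    - "80:80"             # host port 80 -> nginx
  links:
    - web:web

postgres:
  image: postgres:latest
  ports:
    - "5432:5432"         # publish Postgres on the host
  volumes_from:
    - data

redis:
  image: redis:latest
  ports:
    - "6379:6379"

data:
  image: postgres:latest
  volumes:
    - /var/lib/postgresql/data
  command: "true"         # exits immediately; the container only holds the volume
EOF
```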

Here, we're defining five services: web, nginx, postgres, redis, and data.

- First, the web service is built via the instructions in the Dockerfile within the "web" directory, where the Python environment is set up, requirements are installed, and the Django application is fired up on port 8000. Requests arrive on port 80 of the host environment (i.e., the Docker Machine) and are forwarded to that port via the nginx service. This service also adds environment variables, defined in the .env file, to the container.

- The nginx service acts as a reverse proxy, forwarding requests either to Django or to the static file directory.

- Next, the postgres service is built from the official PostgreSQL image from Docker Hub, which installs Postgres and runs the server on the default port, 5432.

- Likewise, the redis service uses the official Redis image to install, well, Redis, and the server then runs on port 6379.

- Finally, notice the separate volume container, called data, that's used to store the database data. This helps ensure that the data persists even if the Postgres container is completely destroyed.

Now, to get the containers running, build the images and then start the services:
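
Assuming your shell still points at the dev machine, this boils down to two Compose commands. The -d flag starts the containers in the background:

```shell
# Build the images defined in docker-compose.yml (slow the first time, cached afterwards)
docker-compose build
# Start all services in detached mode
docker-compose up -d
```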

Grab a cup of coffee. Or go for a long walk. This will take a while the first time you run it. Subsequent builds run much quicker since Docker caches the results from the first build.

Once the services are running, we need to apply the database migrations:
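
A sketch, assuming manage.py sits in the web container's working directory and python is on that container's PATH. Here, run --rm starts a one-off web container, applies the migrations, and removes the container when it finishes:

```shell
# Apply Django's migrations inside a one-off container of the web service
docker-compose run --rm web python manage.py migrate
```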

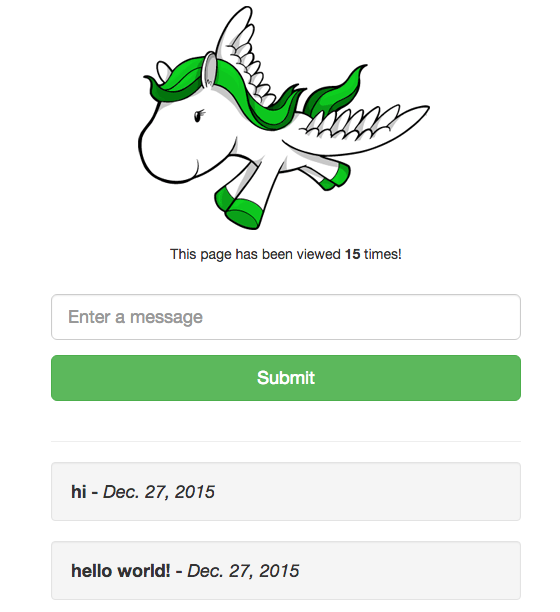

Grab the IP associated with the dev machine (docker-machine ip dev), and then navigate to that IP in your browser:

Nice!

Try refreshing. You should see the counter update. Essentially, we're using the Redis INCR command to increment a counter after each handled request. Check out the code in web/docker_django/apps/todo/views.py for more info.
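
You can also watch the counter from the shell instead of the browser. This assumes curl is installed locally and that the app is served on port 80 of the dev machine. Each response's HTML should show a higher count than the one before:

```shell
# Request the page twice through nginx; the counter in the HTML should increase
curl -s "http://$(docker-machine ip dev)/"
curl -s "http://$(docker-machine ip dev)/"
```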

Again, this created five services, all running in different containers:
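
Compose lists the containers it manages for this project:

```shell
# Show each service's container name, command, state, and port mappings
docker-compose ps
```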

To see which environment variables are available to the web service, run:
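
One way to do it is to start a throwaway web container and have it print its environment. The env_file settings from the service definition apply to run as well, so the values from .env show up:

```shell
# Print the web container's environment, then remove the container
docker-compose run --rm web env
```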

To view the logs:
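
With Compose v1.5.2, this keeps following new output until you press Ctrl+C. Naming a service, as in docker-compose logs web, narrows the output to that one container:

```shell
# Show the combined logs of all services
docker-compose logs
```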

Since we forwarded the Postgres port to the host environment in the docker-compose.yml file, you can also enter the Postgres shell to add users/roles as well as databases via:
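
This assumes the psql client is installed on your own computer. It connects as postgres, the default superuser of the official image. The --password flag makes psql prompt for a password before connecting:

```shell
# Connect to Postgres through the port published on the dev machine
psql -h "$(docker-machine ip dev)" -p 5432 -U postgres --password
```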

Ready to deploy? Stop the processes via docker-compose stop and let’s get the app up in the cloud!

Deployment

So, with our app running locally, we can now push this exact same environment to a cloud hosting provider with Docker Machine. Let’s deploy to a Digital Ocean box.

After you sign up for Digital Ocean, generate a Personal Access Token, and then run the following command:
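
A sketch, assuming you keep the token in a shell variable. The variable name DIGITALOCEAN_ACCESS_TOKEN and the placeholder value are ours, and the droplet's region, size, and image are left at the driver's defaults:

```shell
# Replace the placeholder with your own Personal Access Token
DIGITALOCEAN_ACCESS_TOKEN="your-token-here"
# Provision a droplet, install Docker on it, and register it as machine "production"
docker-machine create \
  --driver digitalocean \
  --digitalocean-access-token "$DIGITALOCEAN_ACCESS_TOKEN" \
  production
```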

This will take a few minutes to provision the droplet and set up a new Docker Machine called production.

Now we have two Machines running, one locally and one on Digital Ocean:
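
The same listing command now shows both. dev uses the virtualbox driver and production uses digitalocean:

```shell
# Both "dev" and "production" should appear, each with its own URL
docker-machine ls
```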

Set production as the active machine and load the Docker environment into the shell:
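
As with dev, this evaluates the variables printed by docker-machine env, so later docker and docker-compose commands in this shell talk to the droplet. docker-machine active then reports which machine the shell points at:

```shell
# Point this shell's Docker client at the "production" droplet
eval "$(docker-machine env production)"
# Sanity check: should print "production"
docker-machine active
```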

Finally, let's build the Django app again in the cloud. This time we need to use a slightly different Docker Compose file that does not mount a volume in the container. Why? Well, the volume is perfect for local development since we can update our local code in the "web" directory and the changes will immediately take effect in the container. In production, there's no need for this, obviously.
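
A sketch of the production run. It assumes the alternate file is called production.yml, a hypothetical name, so use whatever your copy of the project calls it. It also assumes that file defines the same services minus the code volume. The -f flag has to come before the subcommand:

```shell
# Build and start the stack on the droplet using the production Compose file
docker-compose -f production.yml build
docker-compose -f production.yml up -d
# Apply migrations against the production database
docker-compose -f production.yml run --rm web python manage.py migrate
```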

Did you notice how we specified a different config file for production? What if you wanted to also run collectstatic? See this issue.

Grab the IP address of the production machine (docker-machine ip production) and view it in the browser. If all went well, you should see your app running.