Conversational UI has always been a reach goal for technologists. Its sheer presence in science-fiction movies alone is an indicator of how much we as a society value this mode of interaction. There are many reasons for this. From an early age, we’re taught how to interact with each other via conversation – wouldn’t it be great if computers could understand us instead of us learning how to use them?

This notion of “smart bots” came quickly this year, and came to stay. Facebook released their own bot platform, Microsoft has a platform for building them, and we’re starting to see independent vendors pop up like wit.ai and api.ai. Developers want to create them, and users want to use them.

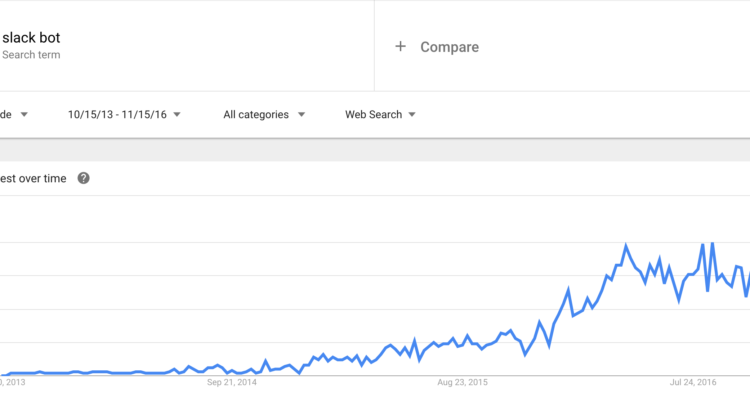

Perhaps Slack was an instigator in this trend too; it has had open APIs allowing users to create bots since its inception, and with its recent surge in popularity, it’s no wonder everyone is “bot thirsty.”

So where does this leave us? With a rise in popularity and increased interest in creating bots, what are the best ways to do so?

Unfortunately, there’s no clear-cut answer on this one. Everyone is releasing their own proprietary platform with unique, incompatible APIs, each trading off ease of use against customizability differently. At the end of the day, creating bots and creating natural conversation flows is an incredibly challenging process. There’s a reason why the most used Siri feature is setting timers and the most used Alexa feature is playing music. Creating richer experiences beyond simple command-and-respond actions is quite difficult.

And despite the plethora of frameworks and platforms, none of them presents a compelling way to debug your bot-conversation (botversation™) on a system level. How do we determine what happened when you ask your bot about “music” and it responds with answers about “mac and cheese”?

When something goes wrong, we want to gain introspection into the system, which (hopefully) will allow us to more easily debug. We don’t want to only see “you said this, so we said that” but rather a whole linked set of asynchronous events that follow your speech utterance and intent lifecycle, allowing us to see exactly what happened behind the scenes and enabling us to target exactly what went wrong.
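To make the idea of a linked set of events concrete, here is a minimal sketch of how such a lifecycle could be recorded. Everything in it is an assumption for illustration: the `LifecycleEvent` and `LifecycleLog` names, the stage labels, and the `RecipeIntent` intent are hypothetical and do not come from any particular bot platform.

```python
import itertools
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class LifecycleEvent:
    event_id: int
    trace_id: str             # shared by every event that one utterance triggers
    parent_id: Optional[int]  # the event that caused this one; None for the root
    stage: str                # hypothetical labels: "utterance", "intent", "response"
    detail: str


class LifecycleLog:
    """In-memory record of events, linked by trace ID and parent ID."""

    def __init__(self) -> None:
        self._events: List[LifecycleEvent] = []
        self._ids = itertools.count(1)

    def record(
        self,
        trace_id: str,
        stage: str,
        detail: str,
        parent: Optional[LifecycleEvent] = None,
    ) -> LifecycleEvent:
        event = LifecycleEvent(
            event_id=next(self._ids),
            trace_id=trace_id,
            parent_id=parent.event_id if parent else None,
            stage=stage,
            detail=detail,
        )
        self._events.append(event)
        return event

    def chain(self, trace_id: str) -> List[LifecycleEvent]:
        """Return the events for one utterance in the order they were recorded."""
        return [e for e in self._events if e.trace_id == trace_id]


def format_chain(events: List[LifecycleEvent]) -> str:
    """Render a chain as a single readable line."""
    return " -> ".join(f"{e.stage}:{e.detail}" for e in events)


# Made-up example: the user asks for music and gets a recipe instead.
log = LifecycleLog()
utterance = log.record("t1", "utterance", "play some music")
intent = log.record("t1", "intent", "RecipeIntent", parent=utterance)
log.record("t1", "response", "mac and cheese recipe", parent=intent)

print(format_chain(log.chain("t1")))
```

Reading the chain from left to right shows where the conversation went off track: in this made-up trace, the wrong intent was matched before any response was generated.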

Utilizing a tool of this nature will solve common pain points developers often face when setting up intricate conversation workflows. The fewer pain points there are, the faster users can get up and running to create awesome conversational bots.

Enough of the talk, let’s look at a tool we’ve developed to address this. We’ll be walking through a common issue faced when setting up a conversation: “I wanted my bot to respond with ___ but instead it responded with ___.”

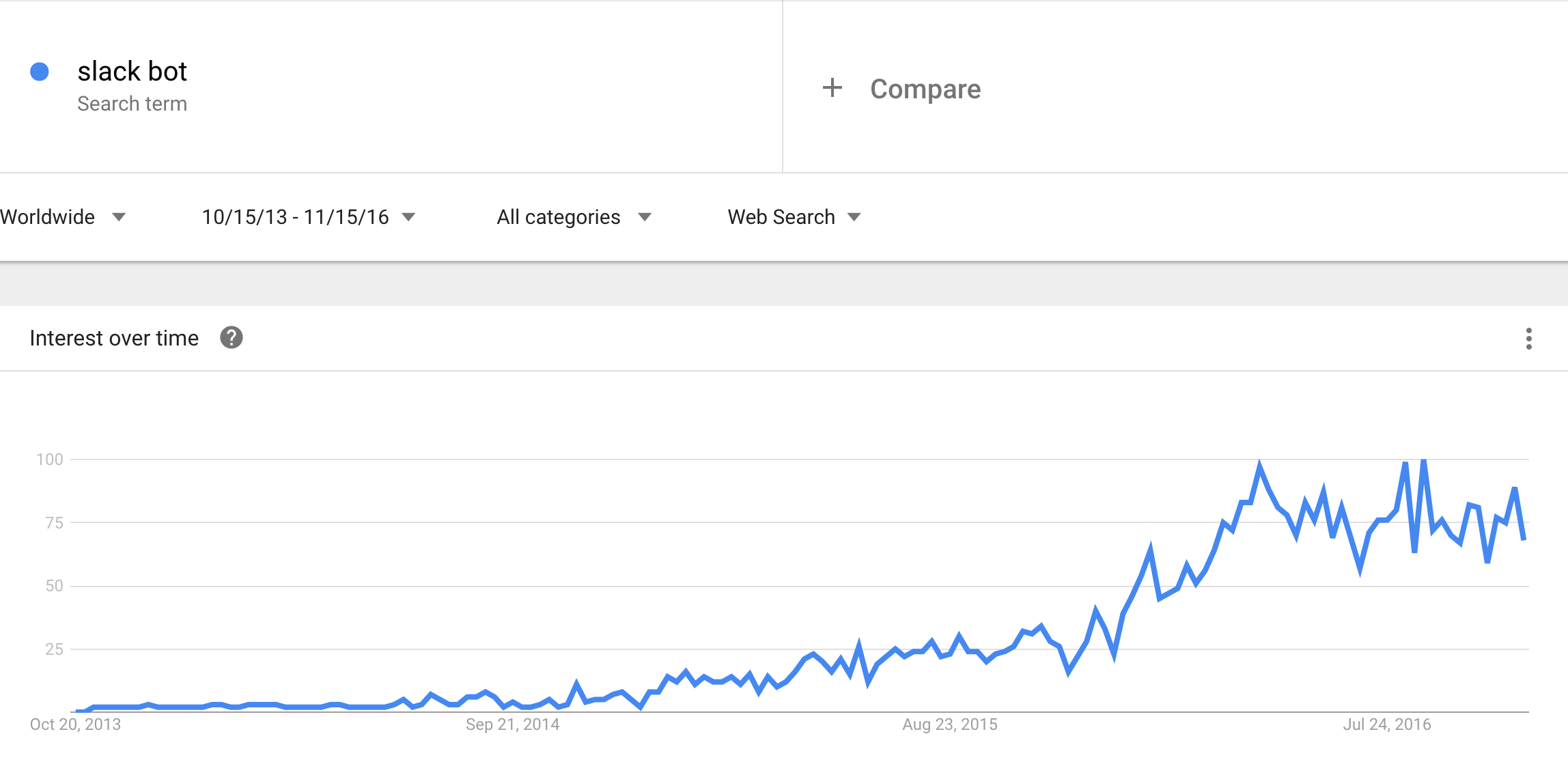

I submit that while the following might be pretty and a good record of your interactions with a system, it wouldn’t help you figure out what went wrong:

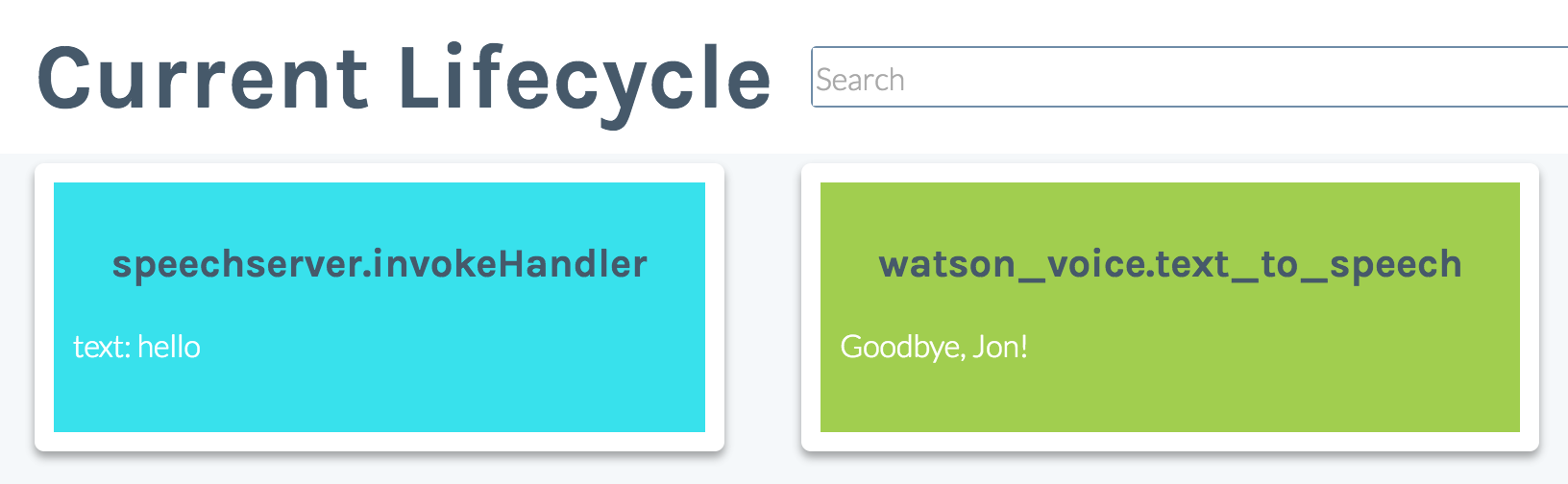

If instead we saw an event lifecycle indicating to us what actions the input text invoked, we would have a greater sense of why the system produced that output text:

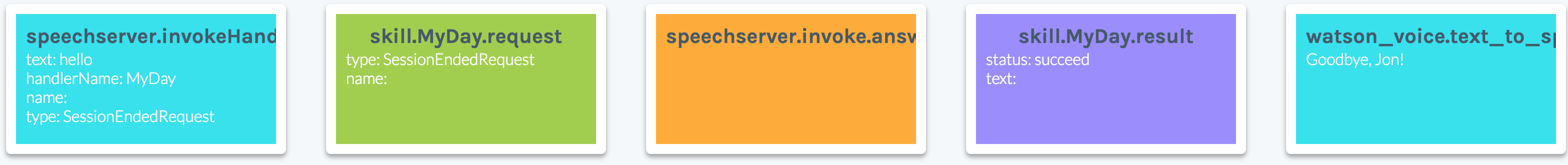

With this new information we can see that, for some reason, our “hello” input text triggered a SessionEndedRequest. This is no good! What if we could retrain the system from this view to tell it “hey! don’t do that! when I say this, I actually want you to invoke something else!”

Such a view would allow us to select the correct skill and intent for our desired “hello” response:

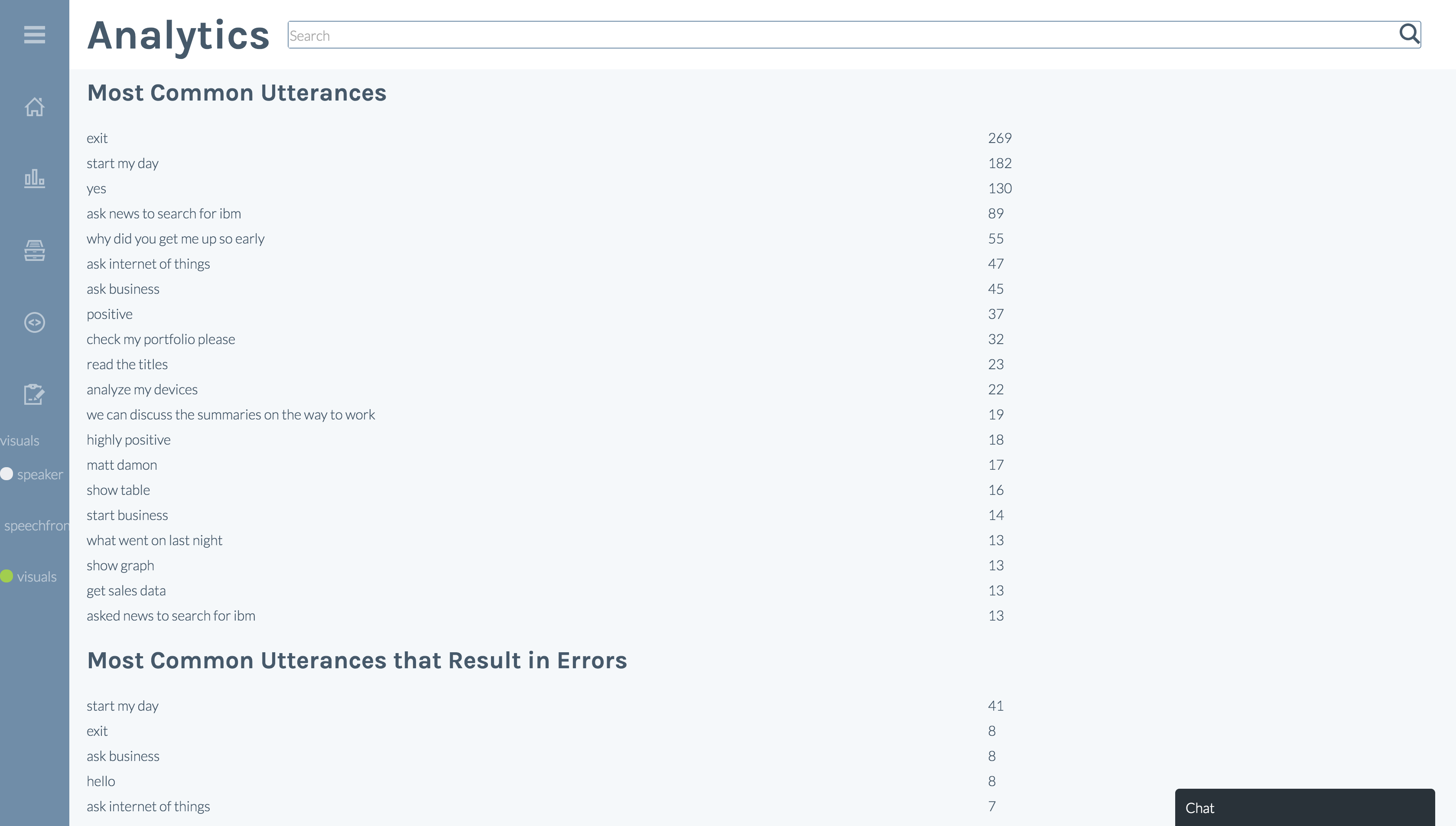
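One simple way to picture this kind of correction is a lookup table of overrides that is consulted before the system's normal resolution. The sketch below is only that, an override table rather than real model retraining, and the `Router` class, the `flawed_resolver` function, and the `greeting` / `HelloIntent` names are hypothetical.

```python
from typing import Callable, Dict, Tuple

Route = Tuple[str, str]  # (skill, intent)


class Router:
    """Resolves utterances, preferring developer-supplied corrections."""

    def __init__(self, default_resolver: Callable[[str], Route]) -> None:
        self._default = default_resolver
        self._corrections: Dict[str, Route] = {}

    @staticmethod
    def _normalize(utterance: str) -> str:
        # Lowercase and collapse whitespace so "Hello" and " hello " match.
        return " ".join(utterance.lower().split())

    def correct(self, utterance: str, skill: str, intent: str) -> None:
        """Record that this utterance should invoke the given skill and intent."""
        self._corrections[self._normalize(utterance)] = (skill, intent)

    def resolve(self, utterance: str) -> Route:
        key = self._normalize(utterance)
        if key in self._corrections:
            return self._corrections[key]
        return self._default(utterance)


def flawed_resolver(utterance: str) -> Route:
    # Stand-in for the misbehaving system: every input ends the session.
    return ("system", "SessionEndedRequest")


router = Router(flawed_resolver)
before = router.resolve("hello")
router.correct("Hello", "greeting", "HelloIntent")
after = router.resolve("  hello ")

print(before)
print(after)
```

Utterances without a correction still fall through to the default resolver, so a fix for “hello” does not change how anything else is handled.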

Once we have this framework in place, we need a more convenient way of finding out what went wrong. We can run statistics and analytics on our recorded lifecycle dataset to identify the most common utterances and which of those resulted in the most errors. We can also compute similar metrics for skill sets and actions.
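As a rough sketch of what those metrics could look like, the snippet below counts utterances and errors over a small, made-up dataset. The record shape `(utterance, skill, succeeded)` and the sample values are assumptions for illustration, not data from a real system.

```python
from collections import Counter
from typing import List, Tuple

Record = Tuple[str, str, bool]  # (utterance, skill, succeeded)

# Fabricated sample records, purely for demonstration.
records: List[Record] = [
    ("hello", "greeting", False),
    ("hello", "greeting", False),
    ("play music", "music", True),
    ("hello", "greeting", True),
    ("play music", "music", False),
    ("set a timer", "timer", True),
]


def most_common_utterances(data: List[Record], n: int = 3) -> List[Tuple[str, int]]:
    """Return the n most frequent utterances with their counts."""
    return Counter(utterance for utterance, _, _ in data).most_common(n)


def errors_by_utterance(data: List[Record]) -> List[Tuple[str, int]]:
    """Count failed interactions per utterance, most errors first."""
    return Counter(u for u, _, ok in data if not ok).most_common()


def errors_by_skill(data: List[Record]) -> List[Tuple[str, int]]:
    """Count failed interactions per skill, most errors first."""
    return Counter(skill for _, skill, ok in data if not ok).most_common()


print(most_common_utterances(records))
print(errors_by_utterance(records))
print(errors_by_skill(records))
```

In this sample, “hello” is both the most frequent utterance and the one with the most errors, which would make it the first thing to investigate.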

Throwing this all together in a single tool enhances the developer experience when adding new skill sets and utterances. It is by no means perfect, but rather a start. Allowing developers to take ownership of the skills that they add and what functions those skills invoke, while giving them introspection into the asynchronous system events, creates a rather powerful developer experience.

Gone are the days of poring over system logs. Empowering developers empowers our users. By focusing on the developer experience when creating bot frameworks, we ultimately create a richer user experience, and the conversational UI we have always dreamed of.